SSIS – Part 4

SSIS Interview Questions and Answers for Experienced and Freshers

Here we are publishing series of posts on SSIS Interview questions and answers Part 4 for experienced and freshers. Below is the series 4.

Q. Difference between Union–all and Merge Join?

Ans:

- Merge transformation can accept only two inputs whereas Union all can take more than two inputs

- Data has to be sorted before Merge Transformation whereas Union all doesn’t have any condition like that.

Q. What is difference between Multicast and Conditional Split?

Ans:

The Multicast transformation distributes its input to one or more outputs. This transformation is similar to the Conditional Split transformation. Both transformations direct an input to multiple outputs. The difference between the two is that the Multicast transformation directs every row to every output, and the Conditional Split directs a row to a single output

Q. What is the difference between DTS and SSIS?

Ans:

Well, nothing except both the Microsoft SQL Server Products.

Even though both are the ETL tools, we can differentiate if you are asked observations.

| S.no | DTS | SSIS |

| 1 | Data Transformation Services | Sql Server Integration Services |

| 2 | Using Activex Script | Using Scripting Language |

| 3 | No Deployment wizard | Deployment wizard |

| 4 | Limited Set of Transformation available | Huge of Transformations available |

| 5 | Not Supporting BI Functionality | Completely supporting end to end process of BI |

| 6 | Single Tasks at a time | Multi Tasks run parallel |

| 7 | It is Un managed script | Managed by CLR |

| 8 | DTS can develop thru Enterprise manager | SSIS can thru Business Intelligence Development Studio (BIDS, nothing but new version of VS IDE) |

| 9 | We can deploy only at local server | It can be deployed using multiple server using BIDS |

| 10 | Designer contains Single Pane | SSIS designer contains 4 design panes: |

| a) Control Flow | ||

| b) Data Flow | ||

| c) Event Handlers & | ||

| d) Package Explorer. | ||

| 11 | No Event Hander | Event Handler Available |

| 12 | No Solution Explorer | Solution Explorer is available, with packages, connections and Data Source Views (DSV) |

| 13 | Connection and other values are static, not controlled at runtime. | It can be controlled dynamically using configuration |

Q. What is the difference between Fuzzy Lookup and Fuzzy Grouping?

Ans:

The Fuzzy Grouping task performs the same operations as the Fuzzy Lookup task but instead of evaluating input records against an outside reference table, the input set becomes the reference. Input records are therefore evaluated against other records in the input set and evaluated for similarity and assigned to a group.

Q. What’s the difference between Control Flow and Data Flow?

Ans:

Control Flow:

- Process Oriented

- Doesn’t manage or pass data between components.

- It functions as a task coordinator

- In control flow tasks requires completion (Success.,failure or completion)

- Synchronous in nature, this means, task requires completion before moving to next task. If the tasks are not connected with each other but still they are synchronous in nature.

- Tasks can be executed both parallel and serially

- Three types of control flow elements in SSIS 2005

- Containers:Provides structures in the packages

- Tasks: Provides functionality in the packages

- Precedence Constraints: Connects containers, executables and tasks into an ordered control flow.

- It is possible to include nested containers as SSIS Architecture supports nesting of the containers. Control flow can include multiple levels of nested containers.

Data Flow

- Streaming in nature

- Information oriented

- Passes data between other components

- Transformations work together to manage and process data. This means first set of data from the source may be in the final destination step while at the same time other set of data is still flowing. All the transformations are doing work at the same time.

- Three types of Data Flow components

- Sources: Extracts data from the various sources (Database, Text Files etc)

- Transformations: Cleans, modify, merge and summarizes the data

- Destination: Loads data into destinations like database, files or in memory datasets

Q. What is difference between For Loop and For Each Loop?

Ans:

A for loop will execute the tasks a specified number of times, in other words 10 times, or 25 times, and the number of times is specified in the definition of the container. You can use a variable to specify what that count is.

A for each loop will execute once for each item in the collection of items that it is looking at. A good example would be if users are putting an Excel file into a directory for import into the DB. You cannot tell ahead of time how many will be in the directory, because a user might be late, or there might be more than one file from a given user. When you define the ForEach container, you would tell it to execute for each *.xls in the directory and it will then loop through, importing each one individually, regardless of how many files are actually there.

Q. What is the difference between “OLEDB command” transformation and “OLEDB” destination in dataflow?

Ans:

The OLE DB Command is a pretty simple transformation that’s available within a Data Flow that can run a SQL statement that can insert, update, or delete records to, in, or from a desired table. It’s good to keep in mind that this transformation initiates a row-by-row operation, so you may experience some performance limitations when dealing with large amounts of data.

OLEDB destination can use Fast Load options hence perform bulk uploads.

Q. What is the Difference between merge and Merge Join Transformation?

Ans:

- Merge Transformation:

- The data from 2 input paths are merged into one

- Work as UNION ALL

- Metadata for all columns needs to be same

- Use when merging of data from 2 data source

- Merge Join Transformation:

- The data from 2 inputs are merged based on some common key.

- Work as JOIN (LEFT, RIGHT OR FULL)

- Key columns metadata needs to be same.

- Use when data from 2 tables having foreign key relationship needs to present based on common key

Q. What is the difference between “ActiveX Script” and “Script Task”?

Ans:

- We could say “Script Task” is the latest version for the deprecated feature “ActiveX Script”. Both are used to implement extended functionality in SSIS.

- ActiveX script supports VBScript and JScript where as “Script Task supports “VB.Net and C#.Net”.

- “Script Task” is preferable as “ActiveX Script” has been removed in MSSQL 2012.

- Script Task is supported with integrated help, IntelliSense, debugging and can reference external Dotnet assembles.

Q. What is the difference between “Script Task” and “Script Component”?

Ans:

- Both are used to extend the native functionality of SSIS.

- “Script Task” is to enhance the functionality for control flow where as “Script Component” is to enhance the functionality for Data flow.

- “Script Task” can handle the execution of parts of the package where as “Script Component” can handle the data flow and transformations by processing row by row.

Q. What is the difference between “Execute SQL Task” and “Execute T-SQL statement” Task?

Ans:

- The Execute T-SQL Statement task takes less memory, parse time, and CPU time than the Execute SQL task, but is not as flexible.

- If you need to run parameterized queries, save the query results to variables, or use property expressions, you should use the Execute SQL task instead of the Execute T-SQL Statement task

- Execute T-SQL Statement task supports only the Transact-SQL version of the SQL language

- Execute SQL task supports many connection types but the Execute T-SQL Statement task supports only ADO.NET

Q. What is the difference between “Data Conversion” and “Derived Column” transformations?

Data Conversion transformation is used o convert the datatype of a column. Same operation can be done using “Derived Column “transformation using typecast but derived column can also be used to add / create a new column by manipulating the existing column based on expressions.

We have to choose “Data Conversion” when the requirement is only intended to change the datatype. In other words “Data Conversion” is introduced just for developer convenience as it’s a direct method where as in “Derived Column” we have to use an expression to change the datatype of a column.

From 2008 in “Derived Column” transformation, datatype and length information is read only, when we create a new column or created from existing , data type would be assigned based on the expression outcome and the datatype is a read-only column.

To change the datatype we have to use “Data Conversion” transformation.

Q. What is the difference between “Copy Column” and “Derived Column”?

Both transformations can add new columns.

Copy column can add new columns only through existing columns but coming to Derived column it can add new columns without any help from existing columns.

Derived Column can assign different transformations and data types to the new columns whereas Copy Column cannot.

Derived Column supports error output whereas Copy Column cannot.

Q. What is the difference between UNIONALL and MERGE transformations?

The Merge transformation combines two sorted datasets into a single dataset. The rows from each dataset are inserted into the output based on values in their key columns.

The Merge transformation is similar to the Union All transformations. Use the Union All transformation instead of the Merge transformation in the following situations:

- The transformation inputs are not sorted.

- The combined output does not need to be sorted.

- The transformation has more than two inputs.

Q. What is the difference between for loop and for each loop container?

The “For Loop Container” executes specified number of times like 10 times, 20 times until the specified condition is met.

The “Foreach Loop Container” runs over an iterator. This iterator can be files from a folder, records from ADO, data from a variable etc.

Q. How to pass property value at Run time? How do you implement Package Configuration?

Ans:

A property value like connection string for a Connection Manager can be passed to the pkg using package configurations. Package Configuration provides different options like XML File, Environment Variables, SQL Server Table, Registry Value or Parent package variable.

Q. How would you deploy a SSIS Package on production?

Ans:

- Using Deployment Manifest

- Create deployment utility by setting its property as true.

- It will be created in the bin folder of the solution as soon as package is build.

- Copy all the files in the utility and use manifest file to deploy it on the Prod.

- Using import/Export and scheduling a job

Q. What are the new features added in SQL Server 2008 SSIS?

Ans:

- Improved Parallelism of Execution Trees

- .NET language for Scripting

- New ADO.NET Source and Destination Component

- Improved Lookup Transformation

- New Data Profiling Task

- New Connections Project Wizard

- DT_DBTIME2, DT_DBTIMESTAMP2, and DT_DBTIMESTAMPOFFSET data types

Improved Parallelism of Execution Trees: The biggest performance improvement in the SSIS 2008 is incorporation of parallelism in the processing of execution tree. In SSIS 2005, each execution tree used a single thread whereas in SSIS 2008, the Data flow engine is redesigned to utilize multiple threads and take advantage of dynamic scheduling to execute multiple components in parallel, including components within the same execution tree

.NET language for Scripting: SSIS 2008 is incorporated with new Visual Studio Tool for Application(VSTA) scripting engine. Advantage of VSTA is it enables user to use any .NET language for scripting.

New ADO.NET Source and Destination Component: SSIS 2008 gets a new Source and Destination Component for ADO.NET Record sets.

Improved Lookup Transformation: In SSIS 2008, the Lookup Transformation has faster cache loading and lookup operations. It has new caching options, including the ability for the reference dataset to use a cache file (.caw) accessed by the Cache Connection Manager. In addition same cache can be shared between multiple Lookup Transformations.

New Data Profiling Task: SSIS 2008 has a new debugging aid Data Profiling Task that can help user analyze the data flows occurring in the package. The Data Profiling Task can help users to discover the coerce of these errors by giving better visibility into the data flow.

New Connections Project Wizard: One of the main usability enhancements to SSIS 2008 is the new Connections Project Wizard. The Connections Project Wizard guides user through the steps required to create source and destinations.

DT_DBTIME2, DT_DBTIMESTAMP2, and DT_DBTIMESTAMPOFFSET data types – facilitate data type mapping to equivalent T-SQL date/time data types introduced in SQL Server 2008. Their primary purpose is to provide support for more accurate time measurements.

Q. What are Synchronies and Asynchronous transformations in SSIS?

Ans:

Synchronizes Transformations:

A synchronous transformation processes incoming rows and passes them on in the data flow one row at a time. Output is synchronous with input, it occurs at the same time. Therefore, to process a given row, the transformation does not need information about other rows in the data set. When a transform can modify the row in place so as to not change the physical layout of the result set, it is said to be a synchronous transformation. The output of a synchronous component uses the same buffer as the input and does not require data to be copied to a new buffer to complete the transformation. Reuse of the input buffer is possible because the output of a synchronous component usually contains the same number of records as the input;

An example of a synchronous transformation is the Data Conversion transformation. For each incoming row, it converts the value in the specified column and sends the row on its way. Each discrete conversion operation is independent of all the other rows in the data set.

Asynchronous Transformations:

The output buffer or output rows are not in sync with the input buffer; output rows use a new buffer. In these situations it’s not possible to reuse the input buffer because an asynchronous component can have more, the same or less output records than input records.

- The component has to acquire multiple buffers of data before it can perform its processing. An example is the Sort transformation, where the component has to process the complete set of rows in a single operation.

- The component has to combine rows from multiple inputs. An example is the Merge transformation, where the component has to examine multiple rows from each input and then merge them in sorted order.

- There is no one-to-one correspondence between input rows and output rows. An example is the Aggregate transformation, where the component has to add a row to the output to hold the computed aggregate values.

Asynchronous components can further be divided into the two types described below:

- Partially Blocking Transformation – the output set may differ in terms of quantity from the input set. Thus new buffers need to be created to accommodate the newly created set.

- Blocking Transformation – a transformation that must hold one or more buffers while it waits on one or more buffers, before it can pass that buffer down the pipeline. All input records must read and processed before creating any output records. For example, a sort transformation must see all rows before sorting and block any data buffers from being passed down the pipeline until the output is generated.

Note:

Synchronous components reuse buffers and therefore are generally faster than asynchronous components

Q. Any Idea About execution tree?

Ans:

At run time, the data flow engine breaks down Data Flow task operations into execution trees. These execution trees specify how buffers and threads are allocated in the package. Each tree creates a new buffer and may execute on a different thread.

Execution trees are enormously valuable in understanding buffer usage. They can be displayed for packages by turning on package logging for the Data Flow task

Q. Where are SSIS package stored in the SQL Server?

Ans:

- SQL Server 2000: MSDB..sysdtspackages

- SQL Server 2005: MSDB..sysdtspackages90

- SQL Server 2008: MSDB..sysssispackages

Stores the actual content and the following tables do the supporting roles.

- Sysdtscategories

- sysdtslog90

- sysdtspackagefolders90

- sysdtspackagelog

- sysdtssteplog

- sysdtstasklog

2008:

- sysssispackagefolders

- sysssislog

Q. How to achieve parallelism in SSIS?

Ans:

Parallelism is achieved using MaxConcurrentExecutable property of the package. Its default is -1 and is calculated as number of processors + 2.

Q. Differences between dtexec.exe and dtexecui.exe

Ans:

Both dtexec.exe and dtexecui.exe execute SSIS packages in the same manner. The difference is that dtexecui provided a graphical user interface to construct the command line arguments for dtexec. The command string that is generated with dtexecui can be used as command line arguments to dtexec.

Q. Demonstrate or whiteboard how you would suggest using configuration files in packages. Would you consider it a best practice to create a configuration file for each connection manager or one for the entire package?

Ans:

There should be a single configuration file for each connection manager in your packages that stores their connection string information. So if you have 6 connection managers then you have 6 config files. You can use the same config file across all your packages that use the same connections.

If you have a single config file that stores all your connection managers then all your packages must have contain the connection managers that are stored in that config file. This means you may have to put connection managers in your package that you don’t even need.

Q. Demonstrate or whiteboard using a loop in a package so each file in a directory with the .txt extension is loaded into a table. Before demonstrating this tell which task/container accomplishes this and which enumerator will be used.

Ans:

This would require a Foreach Loop using the Foreach File Enumerator. Inside the Foreach Loop Editor you need to set a variable to store the directory of the files that will be looped through. Next select the connection manager used to load the files and add an expression to the connection string property that uses the variable created in the Foreach Loop.

Q. What techniques would you consider to add notification to your packages? You’re required to send emails to essential staff members immediately after a package fails.

Ans:

This could either be set in the SQL Agent when the package runs or actually inside the package you could add a Send Mail Task in the Event Handlers to notify when a package fails.

There are also third party tools that can accomplish this for you (Pragmatic Works BI xPress).

Q. Have you used SSIS Framework?

Ans:

This is common term in SSIS world which just means that you have templates that are set up to perform routine tasks like logging, error handling etc. Yes answer would usually indicate experienced person, no answer is still fine if your project is not very mission critical.

Q. How many difference source and destinations have you used?

Ans:

It is very common to get all kinds of sources so the more the person worked with the better for you. Common ones are SQL Server, CSV/TXT, Flat Files, Excel, Access, Oracle, MySQL but also Salesforce, web data scrapping.

Q. What configuration options have you used?

Ans:

This is an important one. Configuration should always be dynamic and usually is done using XML and/or Environment Variable and SQL Table with all configurations.

Q. How do you apply business rules in SSIS (Transformations….Specific calculations but also cleansing)?

Ans:

Some people use SSIS only to extract data and then go with stored procedures only….they are usually missing the point of the power of SSIS. Which allow creating “a flow” and on each step applies certain rules this greatly simplifies the ETL process.

Q. Give example of handling data quality issues?

Ans:

Data Quality is almost always a problem and SSIS handles it very well. Examples include importing customers from different sources where customer name can be duplicates. For instance you can have as company name: SQL Server Business Intelligence but also SQL Server BI or SQL Server BI LTD or SQL Server BI Limited or inteligence (with one l). There are different ways to handle it. Robust and time consuming is to create a table with or possible scenarios and update it after each update. You can also use fuzzy grouping which is usually easy to implement and will make usually very good decisions but it is not 100% accurate so this approach has to be justified.

Other typical quality issues are nulls (missing values), outliers (dates like 2999 or types like 50000 instead of 5000 especially important if someone is adjusting the value to get bigger bonus), incorrect addresses and these are either corrected during ETL, ignored, re-directed for further manual updates or it fails the packages which for big processes is usually not practiced.

Q. When to use Stored Procedures?

Ans:

This one is very important but also tricky. ALL SSIS developers have SQL Server background and that is sometime not very good if they use SQL not SSIS approach.

Let’s start with when you typically use SPs. This is for preparing tables (truncate), audit tasks (usually part of SSIS framework), getting configuration values for loops and a few other general tasks.

During ETL extract you usually type simple SQL because it comes from other sources and usually over complication is not a good choice (make it dynamic) because any changes usually affect the package which has to be updated as well.

During Transformation phase (business rules, cleaning, core work) you should use Transformation tasks not Stored procedures! There are loads of tasks that make the package much easier to develop but also a very important reason is readability which is very important for other people who need to change the package and obviously it reduces risks of making errors. Performance is usually very good with SSIS as it is memory/flow based approach. So when to use Stored Procedures for transformations? If you don’t have strong SSIS developers or you have performance reasons to do it. In some cases SPs can be much faster (usually it only applies to very very large datasets). Most important is have reasons which approach is better for the situation.

Q. What is your approach for ETL with data warehouses (how many packages you developer during typical load etc)?

Ans:

This is rather generic question. A typical approach (for me) when building ETL is to. Have a package to extract data per source with extract specific transformations (lookups, business rules, cleaning) and loads data into staging table. Then a package do a simple merge from staging to data warehouse (Stored Procedure) or a package that takes data from staging and performs extra work before loading to data warehouse. I prefer the first one and due to this approach I occasionally consider having extract stage (as well as stage phase) which gives me more flexibility with transformation (per source) and makes it simpler to follow (not everything in one go). So to summarize you usually have package per source and one package per data warehouse table destination. There are might be other approach valid as well so ask for reasons.

Q. What is XMLify component?

Ans:

It is 3rd party free component used rather frequently to output errors into XML field which saves development time.

Q. What command line tools do you use with SSIS?

Ans:

dtutil (deployment), dtexec (execution), dtexecui (generation of execution code)

Q. What is data cleansing?

Ans:

Used mainly in databases, the term refers to identifying incomplete, incorrect, inaccurate, irrelevant, etc. parts of the data and then replacing, modifying, or deleting this dirty data.

Q. Any Idea what is ETI?

Ans:

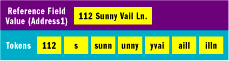

Yes! ETI (Error Tolerant Index) is a technique used in Fuzzy Lookup / Fuzzy Grouping for data cleansing operation. The ETI is a decomposition of the field values contained within a reference table of values into smaller tokens is nothing but a match index.

For example, instead of searching for a street address that contains the value “112 Sunny Vail Ln.”, smaller components of the reference value might be used, such as “sunn”, “nyva”, and “112”.

These individual words are called Tokens, and all tokens in a index are divided using some special character and search with the reference table.

Q. What is Fuzzy Lookup? Can you demonstrate it?

Ans:

Fuzzy lookup transformation is data cleaning task that helps to clean the incoming data with the reference table with the actual value. This transformation tries to find the exact or similar value as a result. The result data set is also depends on the fuzzy matching configuration in the fuzzy lookup transformation task. Fuzzy lookup task will be more helpful when you have data typo issues in the source data.

Fuzzy Lookup transformation creates temporary objects, such as tables and indexes in the SQL Server TempDB. So, make sure that the SSIS user account has sufficient access to the database engine to create and maintain this temporary table. Fuzzy lookup transformation has 3 features.

- Defining maximum number of matches to return to output – It starts with 1 and that is the recommended.

- Token delimiters – It has a set of predefined delimiters and we can also add our’s

- Similarity score – It is the fuzzy algorithm input to match the score with the input row and reference row. This value is between 0 and 1. higher the value is the accurate the result. It is usually 0.60 is the best value for similarity score.

Q. What shape would you use to concatenate two input fields into a single output field?

Ans:

Pivot transformation

Q. What is the Multicast Shape used for?

Ans:

The Multicast transformation distributes its input to one or more outputs. This transformation is similar to the Conditional Split transformation. Both transformations direct an input to multiple outputs. The difference between the two is that the Multicast transformation directs every row to every output, and the Conditional Split directs a row to a single output

Q. What types of things can I pass between packages in SSIS?

Ans:

We can pass Variables primarily between packages. Within a variable we can pass them as any type that is available. So if you were to create an object variable, although memory consuming, we could potentially pass a table that is in memory. Granted, in SQL Server 2012 (Denali) this is much, much easier now with parameters. Actually, this was almost a relief in a way. Configuring packages to consume parent variables was a time consuming and in some cases, confusing situation when many variables were in the process.

Q. How to accomplish incremental loads? (Load the destination table with new records and update the existing records from source (if any updated records are available)

Ans:

There are few methods available:

- You can use Lookup Transformation where you compare source and destination data based on some id/code and get the new and updated records, and then use Conditoional Split to select the new and updated rows before loading the table. However, I don’t recommend this approach, especially when destination table is very huge and volume of delta is very high.

- Use Execute SQL Task and with Staging table

- Find the Maximum ID & Last ModifiedDate from destination and store in package variables. (Control Flow)

- Pull the new and updated records from source and load to a staging table (A dataload table created in destination database) using above variables.(Data Flow)

- Insert and Update the records using Execute SQL Task (Control Flow)

- Use the feature CDC (Change Data Capture) from SQL Server 2008

- Use Conditional split to split data for Inserts. Updates and Deletes

- For inserts redirect to a OLEDB Destination

- For Updates and Deletes redirect using a OLEDB Command transformation

Q. How can you enable the CDC for a table?

Ans:

To enable CDC to a table first the feature should be enabled to the corresponding database. Both can be done using the below procs.

exec sys.sp_cdc_enable_db_change_data_capture

sys.sp_cdc_enable_table_change_data_capture

Q. How can you debug Dataflow?

Ans:

Microsoft Integration Services and the SSIS Designer include features and tools that you can use to troubleshoot the data flows in an Integration Services package.

- SSIS Designer provides data viewers.

- SSIS Designer and Integration Services transformations provide row counts.

- SSIS Designer provides progress reporting at run time.

- Redirect to specified points using error output

Q. How to debug control flow?

Ans:

- Integration Services supports breakpoints on containers and tasks.

- SSIS Designer provides progress reporting at run time.

- Business Intelligence Development Studio provides debug windows.

Q. What can you tell me about Ralph Kimball?

Ans:

Ralph Kimball is an author on the topic of data warehousing and BI. He has been regarded as one of the original architects of data warehousing. Kimball has always had the firm belief that data warehouses should fast and understandable. Oh, and he developed this whole methodology of dimensional modeling. There is that. (It’s also probably a good idea to know the basic idea and structure of dimensional modeling)

Q. Are you familiar with Package Configurations?

Ans:

Yes. Recently I was working on a project where we used the SQL Server Table package configuration to store values for the package parameters. That allowed me to build a GUI for the users to update the package variables each month with new values.

Q. Have you ever used the XML package configuration?

Ans:

Yes. In fact, that is the method we use for storing the connection string used by the sql server table package configuration for the project I just mentioned. We have a dev/production environment, so using an xml file with the connection string (and pointing to that XML file from an environment variable) makes it easy to switch between the two servers.

References:

For more MSBI stuff please have a look at below references:

http://blogs.msdn.com/b/business-intelligence

https://sreenivasmsbi.wordpress.com

http://www.msbiguide.com

http://msbiravindranathreddy.blogspot.in

http://sqlschool.com/MSBI-Interview-Questions.html

https://www.katieandemil.com

http://www.venkateswarlu.co.in

http://www.sqlserverquest.com

http://www.msbiguide.com

thanks for your nice post good one!

[…] SSIS – Part 4 […]