Implicit Conversion Performance Impact

in SQL Server

This post can help you to understand Implicit Conversion Performance Impact in SQL Server. First let’s understand the implicit conversion and then we’ll see how it impacts the performance.

Q. Why Conversion Required?

Ans:

Data conversion has to be occurred whenever we need to compare data with two different datatypes. This comparison happens based on the data type precedence, lower precedence data types will always be implicitly converted up to the higher precedence type.

Q. What are the types of conversions?

Ans:

There are two types of data conversions:

Explicit Conversion: When you explicitly convert data using data conversion functions CAST or CONVERT is known as explicit conversion. This conversion is clearly visible to the user.

Ex: WHERE Col1 = CAST($698.4 AS VARCHAR(10));

Implicit Conversion: SQL Server internally converts data from one data type to another. This conversion can’t be visible to the user. For example an INT type column is compared with TINYINT type column then SQL Server internally converts TINYINT to INT as INT have the higher precedence than TINYINT.

— Col1 – TINYINT and Col2 INT

EX: WHERE Col1 = Col2

Q. What is datatype Precedence?

Ans:

Datatypes are formed in a hierarchy in which each datatype is assigned to a priority. When an operator combines two expressions of different data types, the rules for data type precedence specify that the data type with the lower precedence is converted to the data type with the higher precedence. If the conversion is not a supported implicit conversion, an error is returned. Here is the precedence hierarchy.

Q. What is the impact of Implicit Conversion?

Ans:

Implicit conversion may not impact the data conversion with the tiny data sets but when it comes to the operations on huge dataset it negatively impacts the performance.

- Implicit Conversion increases the CPU usage

- It might cause the INDEX SCAN where an Index Seek would have been possible without the implicit conversion.

- Impact the overall execution time and slow down the query, we can clearly see the difference when we operate it with the huge datasets

Q. Can you take an example and show me how implicit conversion impacts the performance?

Ans:

We are using AdventureWorks2014 in Development SQL Instance

Create a sample table and insert data:

USE [AdventureWorks2014] GO CREATE TABLE Sales_Test ( OrderID INT IDENTITY(1,1) NOT NULL, ProductID VARCHAR(50) NOT NULL, OrderQty SMALLINT NOT NULL, UnitPrice MONEY NOT NULL ) --Create a Clustered index CREATE CLUSTERED INDEX CL_IX_OrderID ON Sales_Test(OrderID); --Insert Data into the table from the existing table for 5 times using GO 5 INSERT INTO Sales_Test SELECT ProductID, OrderQty, UnitPrice FROM SALES.SalesOrderDetail GO 5

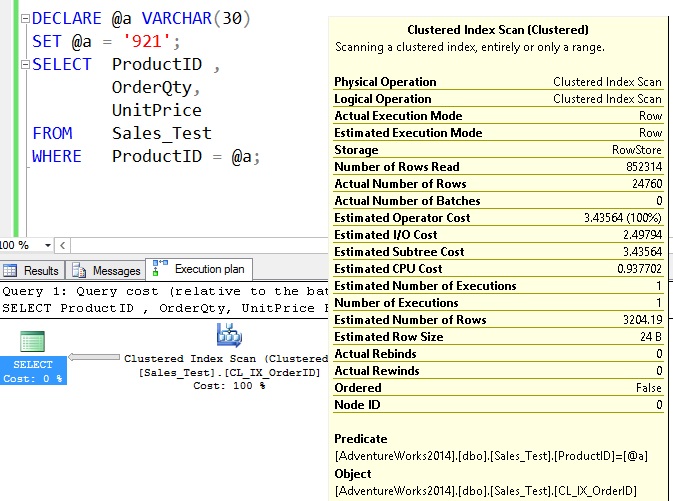

Select query to fetch a specific product details. We are using a parameterized query with the parameter of type VARCHAR.

DECLARE @a VARCHAR (50)

SET @a = ‘921’;

SELECT ProductID ,

OrderQty,

UnitPrice

FROM Sales_Test

WHERE ProductID = @a;

Query Execution Statistics:

Table ‘Sales_Test’. Scan count 1, logical reads 3383, physical reads 0, read-ahead reads 349, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times:

CPU time = 31 ms, elapsed time = 209 ms.

Observations:

- Data types compared: VARCHAR & VARCHAR. Thereof no data conversion required

- CPU Time: 31 ms

Clear the cache

DBCC DROPCLEANBUFFERS GO DBCC FREEPROCCACHE GO

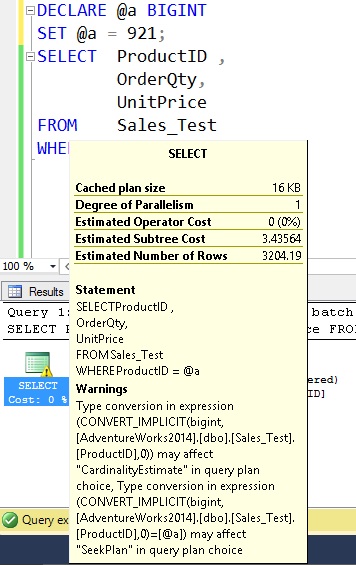

Select query to fetch a specific product details. We are using a parameterized query with the parameter of type BIGINT.

DECLARE @a BIGINT

SET @a = 921;

SELECT ProductID ,

OrderQty,

UnitPrice

FROM Sales_Test

WHERE ProductID = @a;

Query Execution Statistics:

Table ‘Sales_Test’. Scan count 1, logical reads 3383, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times:

CPU time = 109 ms, elapsed time = 225 ms.

Observations:

- Data types compared: VARCHAR & BIGINT.

- You can see the WARNING in SELECT operator

- Since BIGINT has the highest precedence the comparison required converting VARCHAR data to BIGINT. Since “ProductID” is VARCHAR ,if the table is having 1 million rows then one million times the implicit operation has to be occurred that means it should convert each row value from VARCHAR to BIGINT and then compare the value with @a.

- CPU Time: 109 ms which almost 3 times higher than precious execution

Summary:

- “Implicit conversions” is one of the top issues when you talk about performance tuning in SQL Server

- Best thing is we can always prevent / fix the implicit conversion issues.

- Implicit conversion might causes the INDEX SCAN when INDEX SEEK is possible

- It may not affect in initial days but it starts increasing CPU usage when data continues to increase.

- Especially concentrate on queries written in application code, parameterized queries and stored procedures.

- Add the rule that “Avoid Implicit Conversion” in developer best practices list

- From my experience I have seen (tuned) queries when implicit conversion happens between data types NVARCHAR & VARCHAR, DATE & DATETIME, BIGINT & VARCHAR, BIGINT & INT etc.